Many FP&A teams have built systems that can answer almost any question about their business. The data is mapped, the logic is defined, and the models work. Yet people still spend a surprising amount of time trying to pull information out of those systems, because the path to the answer is buried under tools, navigation, and tribal knowledge. Finance leaders have noticed: a 2024 McKinsey survey found that 85% of them are now prioritizing AI specifically to reduce this kind of manual analysis load.

You see it every month. Someone wants to understand a dip in margin, or test what next year looks like if hiring slows down, and suddenly they’re bouncing between dashboards, views, and Slack threads. There are too many hoops to jump through between the question and the insight.

Natural language interfaces can help by providing a simpler way to access and leverage the models finance teams already trust. You ask a question the way you would ask a colleague, and the system reaches into the right structures, pulls the relevant figures, and explains what it found in the context you care about. This is all done without specialized navigation, guesswork, or waiting for someone who “owns” that part of the model.

It feels like a small improvement on the surface, but it fundamentally changes how people interact with planning systems. It means less time spent retrieving the answer and more time spent understanding what the answer actually means.

Why this moment matters

The idea of asking business questions in plain language is not new. What is new is that the underlying pieces have finally matured enough to make it practical. Large language models can now interpret the intent behind a finance question without being thrown off by phrasing or context. Planning and analytics platforms expose APIs, metadata, and semantic definitions that let an LLM retrieve governed numbers instead of guessing. Teams have spent years curating their models, hierarchies, and calculations, which means the structure needed for reliable responses is already in place.
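To make "retrieve governed numbers instead of guessing" concrete, here is a minimal sketch of a semantic-layer lookup in Python. Everything in it is an assumption for illustration: the `MetricDefinition` structure, the metric names, and the synonym lists are hypothetical, and the simple phrase matching stands in for the intent interpretation an LLM would do in practice.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class MetricDefinition:
    name: str        # governed metric name in the planning model (hypothetical)
    formula: str     # definition curated by the finance team
    synonyms: tuple  # phrases a user might type instead of the metric name


# Hypothetical semantic layer; a real one would come from the platform's metadata.
SEMANTIC_LAYER = [
    MetricDefinition("gross_margin_pct", "(revenue - cogs) / revenue",
                     ("gross margin", "gm%")),
    MetricDefinition("headcount", "count of active employees",
                     ("headcount", "head count")),
]


def resolve_metric(question: str) -> Optional[MetricDefinition]:
    """Map a plain-language question to a governed metric, or None if unknown."""
    text = question.lower()
    for metric in SEMANTIC_LAYER:
        if any(phrase in text for phrase in metric.synonyms):
            return metric
    # No governed definition matched: the caller should ask for clarification
    # rather than let the language model invent a number.
    return None
```

The design choice worth noticing is the `None` branch. When no governed definition matches, the interface asks a clarifying question instead of producing an answer on its own.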

Finance work is also shifting in a way that makes natural interaction more valuable. Most questions are exploratory, not scripted. Analysts want to test ideas quickly and move between scenarios without stopping to rebuild reports. Leaders want context around a number, not just the number itself. The traditional interface forces people to click through views, drill into hierarchies, and track down the right report, all of which slows the process down.

Natural language becomes useful in this environment because it meets people where they are. It reduces the “interface tax” that comes from navigating complex systems and allows the planning environment to respond to questions directly.

This shift is meaningful because it expands access. People who understand the business but do not spend their days inside the planning tool can still reach the information they need. Analysts who already know the system well can move faster and explore more. And as teams become comfortable asking questions through a conversational interface, they build habits that make more advanced capabilities like scenario exploration, recommendations, or automated actions much easier to adopt. This aligns with broader industry shifts; Gartner predicts that by 2027, 75% of new analytics content will be consumed via these contextualized, generative interfaces rather than static dashboards alone.

How natural language complements traditional finance tools

Finance teams rely on a set of structured tools that have served them well for years. Dashboards, cube views, reports, and Excel models create consistency, enforce definitions, and anchor the rhythms of planning and forecasting. These tools aren’t going anywhere, nor should they. What changes is how people reach and interpret the logic within them.

Natural language interfaces sit alongside these established tools and open up a different entry point into the same environment. They help when the question does not fit neatly into a predefined report, or when someone wants to explore an idea before deciding which view or metric to study more closely. Instead of navigating through layers of structure, the user can begin with the question and let the system interpret the intent.

As Zwingmann, Marar, and Southekal outline in their 2025 report, Beyond the GenAI Buzz: Why Classical AI Still Powers Critical Business Decisions, this workflow mirrors the relationship between Generative and Classical AI. They argue that Classical AI provides the precision, governance, and rule-based logic required for critical business decisions, while Generative AI provides the flexibility, interpretation, and communication layer on top of that logic. The authors emphasize that “Generative AI and Classical AI can complement and even validate each other’s outputs,” and add that “it is not Generative AI or Classical AI; it is Generative AI and Classical AI” (Zwingmann, Marar, Southekal, 2025).

Users can move through a line of inquiry conversationally, ask follow-up questions, shift perspective without rebuilding a report, and surface explanations that are often buried beneath layers of detail. The data, definitions, and calculations remain governed by the planning system. The natural language layer simply makes them easier to access and understand.

Seen this way, natural language is not a replacement for dashboards, reports, or any other structured tool. It is a complementary mode of interaction that broadens how people work with the same underlying models. The combination helps teams explore ideas more fluidly, interpret results more clearly, and connect the logic of the plan to the decisions that follow.

A new workflow: from the question to the action

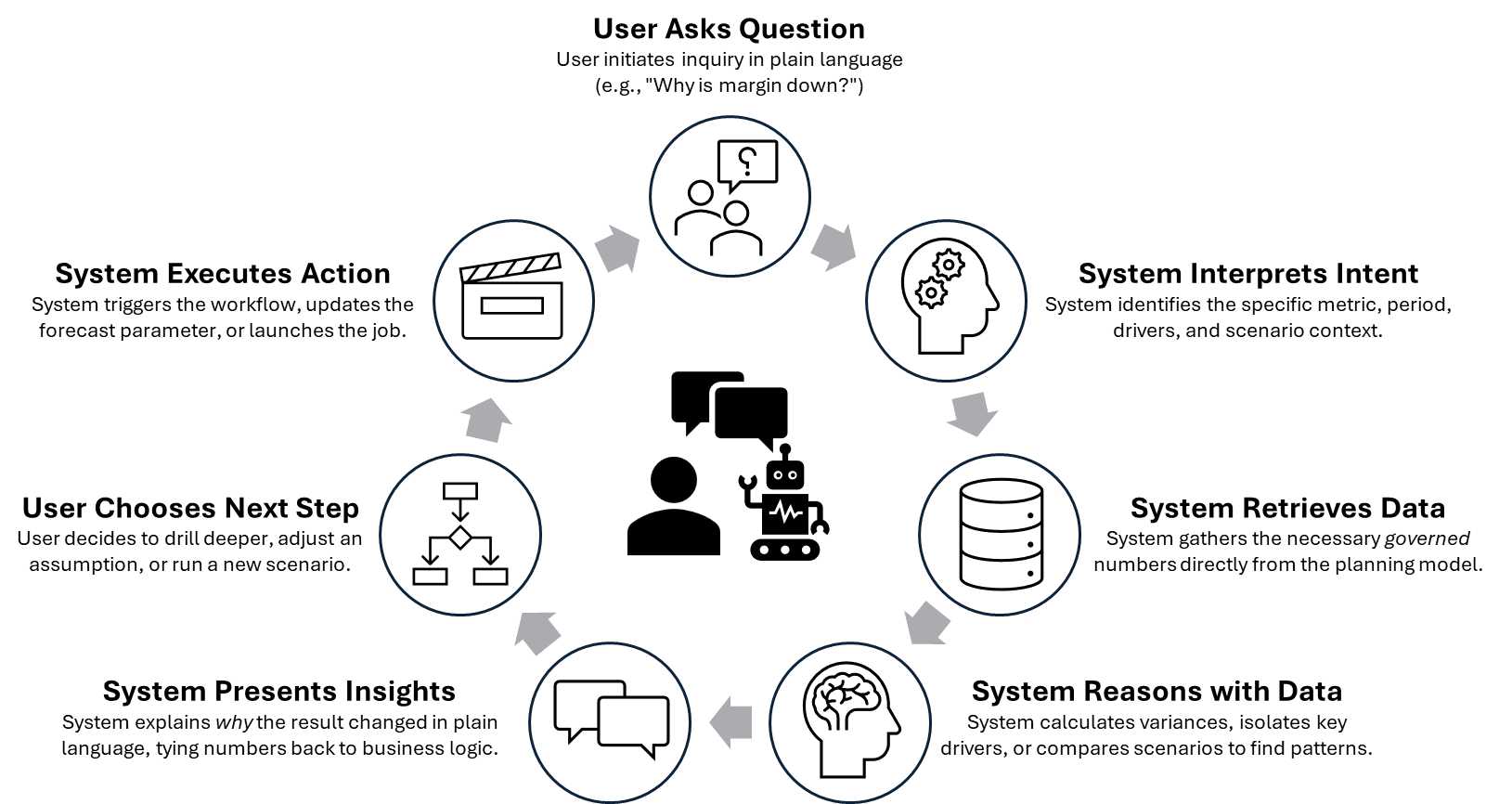

Natural language changes how people move through the planning environment. Instead of starting with navigation, filters, or predefined structures, everything begins with the question a user wants to explore. The interface interprets what they mean, retrieves the governed information from the model, reasons with that information, and presents an explanation that makes sense in context. If the user wants to take the next step, for example to adjust an assumption, run a scenario, or trigger a workflow, the system can do that too.

The diagram above shows this cycle at a high level.

The value of this loop is that it mirrors how finance teams actually think. Questions lead to insights, insights lead to decisions, and the system stays with the user through each step rather than forcing them back into the tool to rebuild the path manually.
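The question-to-action cycle can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a reference implementation: the `MODEL` data, the `Intent` fields, and every function name are hypothetical, and the keyword check in `interpret` stands in for a real language model call.

```python
from dataclasses import dataclass

# Hypothetical governed values: (metric, period) -> gross margin in percent.
MODEL = {
    ("gross_margin_pct", "2024-Q2"): 41.0,
    ("gross_margin_pct", "2024-Q3"): 38.5,
}


@dataclass
class Intent:
    metric: str
    current: str
    prior: str


def interpret(question: str) -> Intent:
    """Stand-in for the LLM step: turn the question into a structured intent."""
    if "margin" in question.lower():
        return Intent("gross_margin_pct", current="2024-Q3", prior="2024-Q2")
    raise ValueError("Could not map the question to a governed metric")


def retrieve(intent: Intent) -> tuple:
    """Read governed numbers from the model; never generate them."""
    return MODEL[(intent.metric, intent.current)], MODEL[(intent.metric, intent.prior)]


def explain(intent: Intent, current: float, prior: float) -> str:
    """Reason over the retrieved figures and describe the change in context."""
    delta = current - prior
    return (f"{intent.metric} moved from {prior} to {current} "
            f"({delta:+.1f} pts) between {intent.prior} and {intent.current}.")


def act(intent: Intent, confirmed: bool) -> str:
    """Optional last step: only queue follow-up work after the user confirms."""
    if not confirmed:
        return "no action taken"
    return f"queued scenario rerun for {intent.metric}"


def answer(question: str) -> str:
    intent = interpret(question)
    current, prior = retrieve(intent)
    return explain(intent, current, prior)
```

Calling `answer("Why did margin dip last quarter?")` walks through interpret, retrieve, and explain in order. The `act` step is kept separate so nothing changes in the model until the user explicitly confirms.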

Guardrails: what has to be true for natural language to work

Natural language only works if the underlying structures are solid. The interface can interpret intent and generate explanations, but the answers still come from the planning model. That means the definitions, rules, attributes, and security must be consistent. The semantic layer has to be clear. The APIs that connect the interface to the model need to be reliable. And teams need visibility into how prompts are handled and how outputs change over time.

This is the same foundation finance teams already depend on for reporting, forecasting, and scenario work. Natural language simply makes the quality of that foundation more visible. If a metric definition is unclear or a driver is misaligned across scenarios, the conversational interface can surface that inconsistency faster. The guardrails are the same ones you would expect in any governed planning environment. The difference is that the interface makes them matter earlier in the process.
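Two of these guardrails, consistent security and visibility into how prompts are handled, can be sketched as a thin wrapper around retrieval. The Python below is a minimal sketch under assumptions: the `ENTITLEMENTS` table, the user names, and the in-memory `AUDIT_LOG` are hypothetical placeholders for whatever the planning platform's security model and logging actually provide.

```python
from datetime import datetime, timezone
from typing import Callable

# Hypothetical entitlements: which governed metrics each user may read.
ENTITLEMENTS = {
    "analyst_a": {"gross_margin_pct"},
    "cfo": {"gross_margin_pct", "headcount"},
}

# Hypothetical audit trail; a real deployment would write to durable storage.
AUDIT_LOG = []


def guarded_lookup(user: str, metric: str, prompt: str,
                   fetch: Callable[[str], float]) -> float:
    """Check entitlement and record the prompt before touching the model."""
    allowed = metric in ENTITLEMENTS.get(user, set())
    AUDIT_LOG.append({
        "at": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "metric": metric,
        "prompt": prompt,
        "allowed": allowed,
    })
    if not allowed:
        raise PermissionError(f"{user} is not entitled to {metric}")
    return fetch(metric)
```

Every request is logged whether or not it is allowed, so the team can review both what people asked and what the interface refused to answer.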

The value: reducing the distance between the question and the decision

The real value of natural language is not in the novelty of asking a model to explain something. It is in how much friction it removes from everyday planning work. People spend less time finding the right place to look and more time thinking about why the result changed. They can follow ideas without rebuilding views. They get explanations that tie the numbers back to the logic inside the model. And the system stays with them as they move from question to insight to action.

This shift is critical for preserving quality. Harvard Business School researchers recently warned of a "convergence to the bot view" where purely AI-driven analysis becomes generic. By using natural language to handle the retrieval, finance teams free up their cognitive capacity to do the actual thinking, ensuring they add the unique human insight that the model might miss.

What comes next: from conversation to coordination

Natural language helps people get answers and understand the logic behind them. The next step is using the same intent to coordinate work across systems. Instead of asking for an explanation or a driver change, a user might ask the system to launch a scenario workflow, update a cost forecast, route an approval, or synchronize data across tools.

That is where agentic workflows come in. Natural interfaces help people ask better questions. Agentic systems help the organization act on those answers. We are already seeing the signals of this shift. Gartner predicts that agentic AI will autonomously make at least 15% of day-to-day work decisions by 2028, up from 0% in 2024.

In the next article, we’ll look at how these agents connect the planning environment to the rest of the enterprise stack to automate the handoffs that still slow teams down today.